When AI makes headlines, all too often it’s because of problems with bias and fairness. Some of the most infamous issues have to do with facial recognition, policing, and health care, but across many industries and applications, we’ve seen missteps where machine learning is contributing to creating a society where some groups or individuals are disadvantaged. So how do we develop AI systems that help make decisions leading to fair and equitable outcomes? At Fiddler, we’ve found that it starts with a clear understanding of bias and fairness in AI. So let’s explain what we mean when we use these terms, along with some examples.

What is bias in AI?

Bias can exist in many shapes and forms, and can be introduced at any stage in the model development pipeline. At a fundamental level, bias is inherently present in the world around us and encoded into our society. We can’t directly solve the bias in the world. On the other hand, we can take measures to weed out bias from our data, our model, and our human review process.

Bias in data

Bias in data can show up in several forms. Here are the top offenders:

Historical bias is the already existing bias in the world that has seeped into our data. This bias can occur even given perfect sampling environments and feature selection, and tends to show up for groups that have been historically disadvantaged or excluded. Historical bias is illustrated by the 2016 paper “Man is to Computer Programmer as Woman is to Homemaker,” whose authors showed that word embeddings trained on Google News articles exhibit and in fact perpetuate gender-based stereotypes in society.

Representation bias is a bit different—this happens from the way we define and sample a population to create a dataset. For example, the data used to train Amazon’s facial recognition was mostly based on white faces, leading to issues detecting darker-skinned faces. Another example of representation bias is datasets collected through smartphone apps, which can end up underrepresenting lower-income or older demographics.

Measurement bias occurs when choosing or collecting features or labels to use in predictive models. Data that’s easily available is often a noisy proxy for the actual features or labels of interest. Furthermore, measurement processes and data quality often vary across groups. As AI is used for more and more applications, like predictive policing, this bias can have a severe negative impact on people’s lives. In a 2016 report, ProPublica investigated predictive policing and found that the use of proxy measurements in predicting recidivism (the likelihood that someone will commit another crime) can lead to black defendants getting harsher sentences than white defendants for the same crime.

Bias in modeling

Even if we have perfect data, our modeling methods can introduce bias. Here are two common ways this manifests:

Evaluation bias occurs during model iteration and evaluation. A model is optimized using training data, but its quality is often measured against certain benchmarks. Bias can arise when these benchmarks do not represent the general population, or are not appropriate for the way the model will be used.

Aggregation bias arises during model construction where distinct populations are inappropriately combined. There are many AI applications where the population of interest is heterogeneous, and a single model is unlikely to suit all groups. One example is health care. For diagnosing and monitoring diabetes, models have historically used levels of Hemoglobin AIc (HbAIc) to make their predictions. However, a 2019 paper showed that these levels differ in complicated ways across ethnicities, and a single model for all populations is bound to exhibit bias.

Bias in human review

Even if your model is making correct predictions, a human reviewer can introduce their own biases when they decide whether to accept or disregard a model’s prediction. For example, a human reviewer might override a correct model prediction based on their own systemic bias, saying something to the effect of, “I know that demographic, and they never perform well.”

What is fairness?

In many ways, bias and fairness in AI are two sides of the same coin. While there is no universally agreed upon definition for fairness, we can broadly define fairness as the absence of prejudice or preference for an individual or group based on their characteristics. Keeping this in mind, let’s walk through an example of how a machine learning algorithm can run into issues with fairness.

Fairness in AI Example

Imagine we create a binary classification model, where we believe we have very accurate predictions:

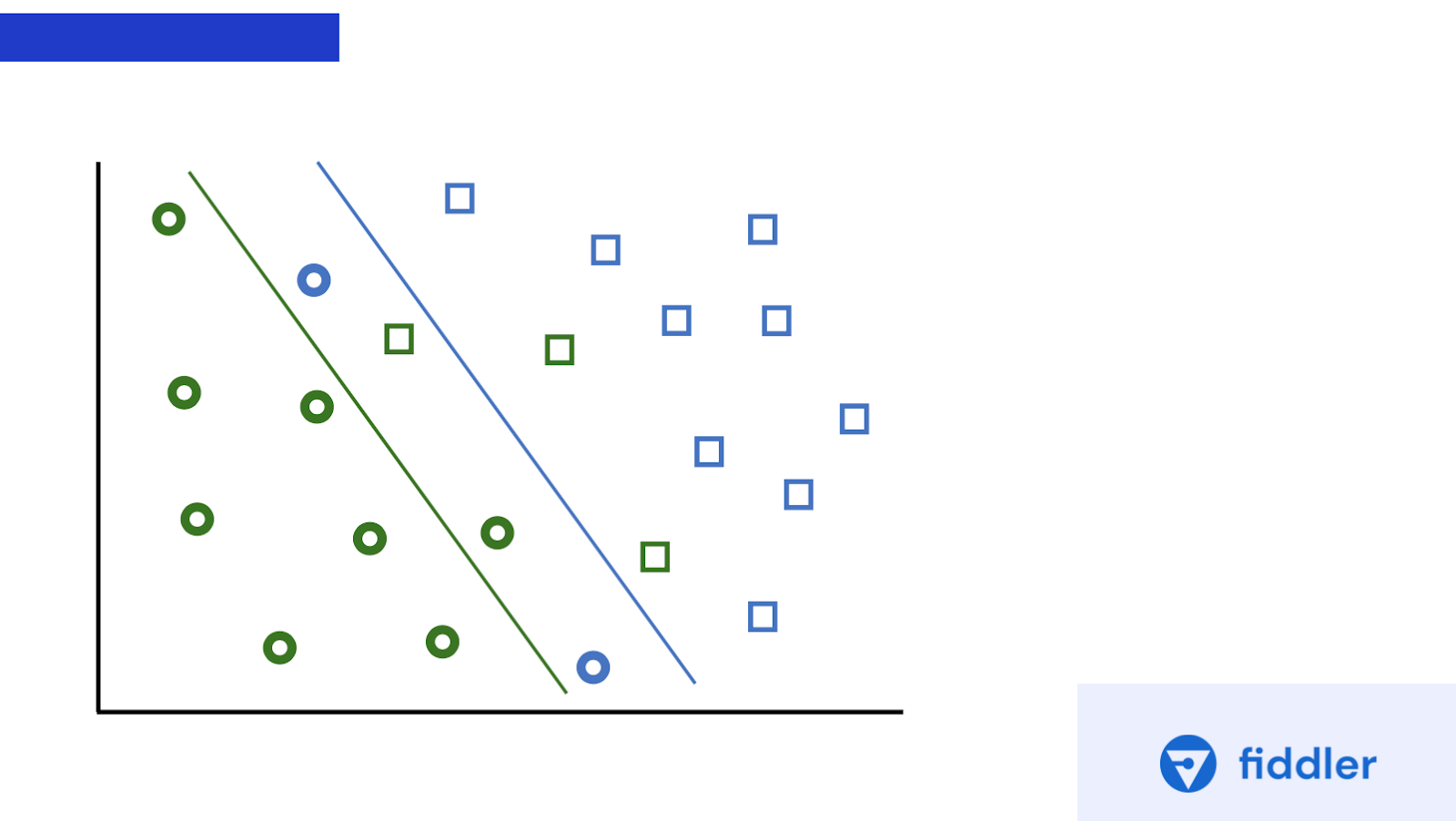

Fairness can come into question if this data actually included two different underlying groups, a green and blue group. These groups could represent different ethnicities, genders, or even geographical or temporal differences like morning and evening users.

This fairness problem could be the result of aggregation bias, like in the previously mentioned example of treating diabetes patients as one homogenous group. In this case, using a single threshold for these two groups would lead to poor health outcomes.

Best practices

To address this issue with fairness, a best practice is to make sure that your predictions are calibrated for each group. If your model’s scores aren’t calibrated for each group, it’s likely that you’re systemically overestimating or underestimating the probability of the outcome for one of your groups.

In addition to group calibration, you may decide to create separate models and decision boundaries for each group. This is fairer for our groups than a single threshold.

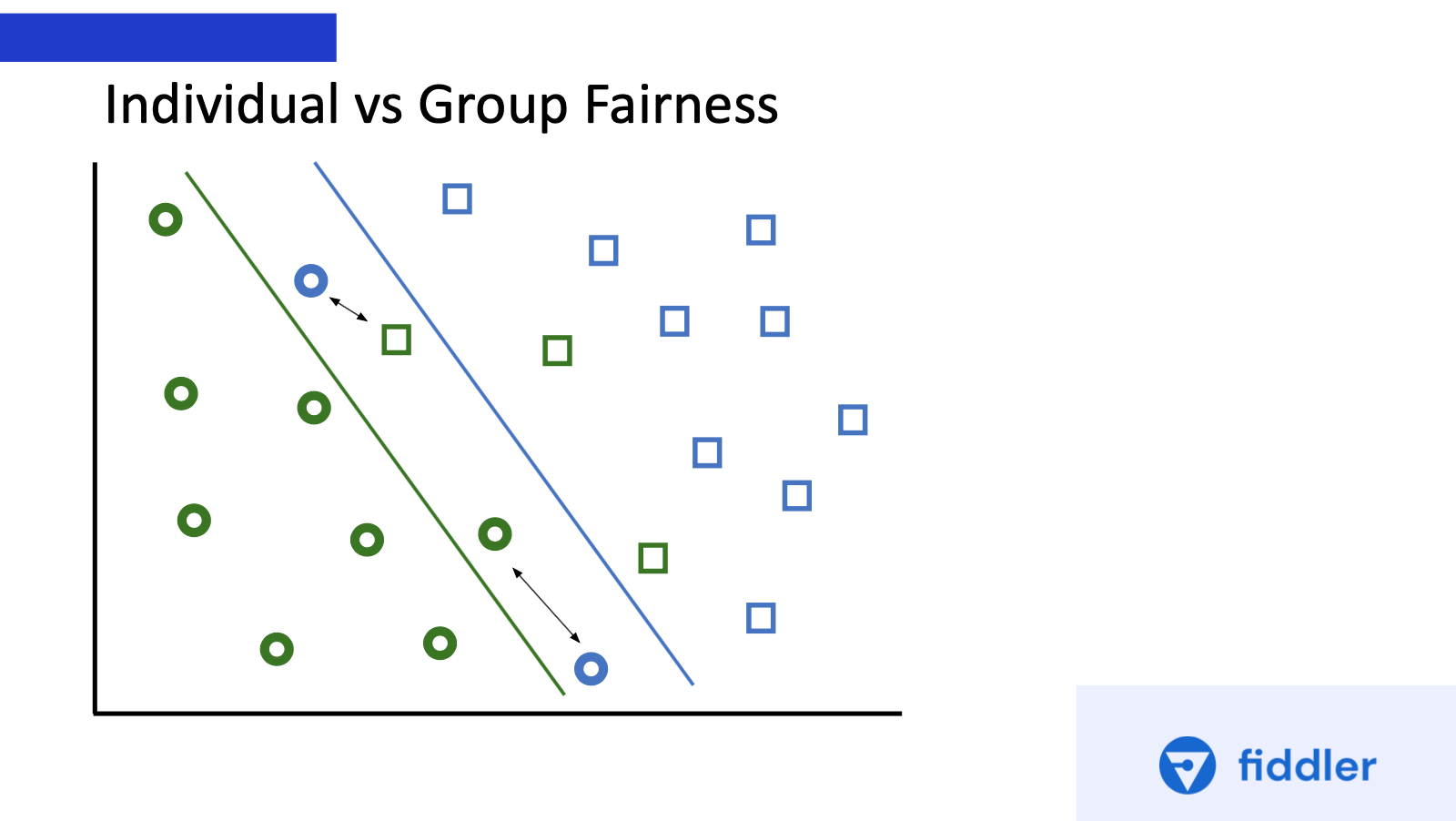

However, creating additional thresholds can lead to a new problem: individual fairness. In the chart below, the blue and green individuals share almost all the same characteristics, but are being treated completely differently by the AI system. As you can see, there is often a tension between group and individual fairness.

As illustrated, bias and fairness in AI is a developing field and more companies, especially in the regulated industries, are investing more to establish well-governed practices. We will continue to introduce more concepts around fairness, including intersectional fairness, and show how different metrics can be used to address these problems.